Radar + Camera Data Fusion in KITTI

KITTI Data

The KITTI dataset offers a variety of data, and here we have selected the raw_data for fusion. You can download the data from: http://www.cvlibs.net/datasets/kitti/raw_data.php

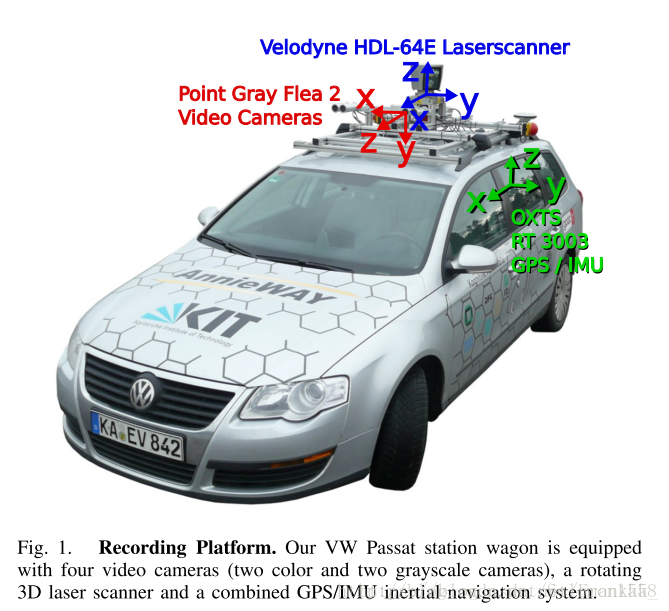

The data collection platform of the KITTI dataset is equipped with 2 grayscale cameras, 2 color cameras, a Velodyne 64-line 3D laser scanner, 4 optical lenses, and a GPS navigation system. The specific sensor parameters are as follows:

- 2 × PointGray Flea2 grayscale cameras (FL2-14S3M-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter

- 2 × PointGray Flea2 color cameras (FL2-14S3C-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter

- 4 × Edmund Optics lenses, 4mm, opening angle ∼ 90◦, vertical opening angle of region of interest (ROI) ∼ 35◦

- 1 × Velodyne HDL-64E rotating 3D laser scanner, 10 Hz, 64 beams, 0.09 degree angular resolution, 2 cm distance accuracy, collecting ∼ 1.3 million points/second, field of view: 360◦ horizontal, 26.8◦ vertical, range: 120 m

- 1 × OXTS RT3003 inertial and GPS navigation system, 6 axis, 100 Hz, L1/L2 RTK, resolution: 0.02m / 0.1◦

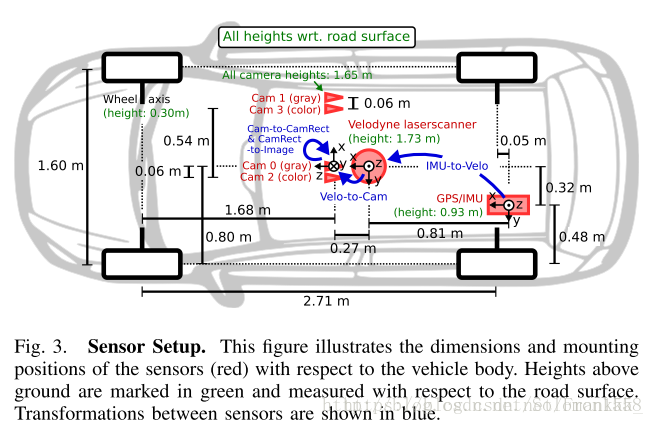

The installation positions of the sensors are shown in the figure below:

For the raw_data dataset we use, the data package (taking 2011_09_26_drive_0001, synced, +rectified data as an example) mainly includes the following parts after extraction:

- Calibration files: calib_cam_to_cam.txt, calib_imu_to_velo.txt, calib_velo_to_cam.txt

- Camera files: Four folders from image_00 to image_03, corresponding to 4 cameras, with camera 02 being commonly used

- Point cloud files: Files of points scanned by the radar, located in the velodyne_points folder, including multiple bin files and timestamp files

Code Implementation

The KITTI dataset provides an official toolkit to facilitate operations on the dataset. For the raw_data, it offers more than a dozen functions to make operations easier. A demo of data fusion is provided as follows:

function run_demoVelodyne (base_dir,calib_dir)

% clear and close everything

close all; dbstop error; clc;

disp('======= KITTI DevKit Demo =======');

% Set according to different input parameters of the function

if nargin<1

base_dir = './data/2011_09_26/2011_09_26_drive_0005_sync';

end

if nargin<2

calib_dir = './data/2011_09_26';

end

cam = 2; % 0-based index

frame = 137; % 0-based index

% Load calibration files, fullfile combines paths and files

% calib is a structure matrix of camera parameters

calib = loadCalibrationCamToCam(fullfile(calib_dir,'calib_cam_to_cam.txt'));

% Tr_velo_to_cam is a parameter matrix

Tr_velo_to_cam = loadCalibrationRigid(fullfile(calib_dir,'calib_velo_to_cam.txt'));

% Calculate the projection matrix from the radar's 3D data to the camera image

R_cam_to_rect = eye(4);

R_cam_to_rect(1:3,1:3) = calib.R_rect{1};

P_velo_to_img = calib.P_rect{cam+1}*R_cam_to_rect*Tr_velo_to_cam;

% Load and display image

img = imread(sprintf('%s/image_%02d/data/%010d.png',base_dir,cam,frame));

fig = figure('Position',[20 100 size(img,2) size(img,1)]);

axes('Position',[0 0 1 1]);

imshow(img); hold on;

% Load velodyne points

fid=fopen(sprintf('%s/velodyne_points/data/%010d.bin',base_dir,frame),'rb');% Open in binary mode

velo = fread(fid,[inf 4],'single');% Read the file in single format, dimension (n,4)

velo = velo(1:5:end,:);% Take one point every five points for easier display

fclose(fid);

% Remove all points behind image plane (approximation)

idx = velo(:,1)<5; % Find indices of radar points with x-coordinate less than 5

velo(idx,:) = []; % Remove these points

% Project to image plane (exclude luminance)

velo_img = project(velo(:,1:3),P_velo_to_img);

% Plot points, colored

cols = jet;

for i=1:size(velo_img,1)

col_idx = round(64*5/velo(i,1));

plot(velo_img(i,1),velo_img(i,2),'o','LineWidth',4,'MarkerSize',1,'Color',cols(col_idx,:));

end

% Plot points, grayscale

% cols = 1-[0:1/63:1]'*ones(1,3);

% for i=1:size(velo_img,1)

% col_idx = round(64*5/velo(i,1));

% plot(velo_img(i,1),velo_img(i,2),'o','LineWidth',4,'MarkerSize',1,'Color',cols(col_idx,:));

% end

The final result is shown below: